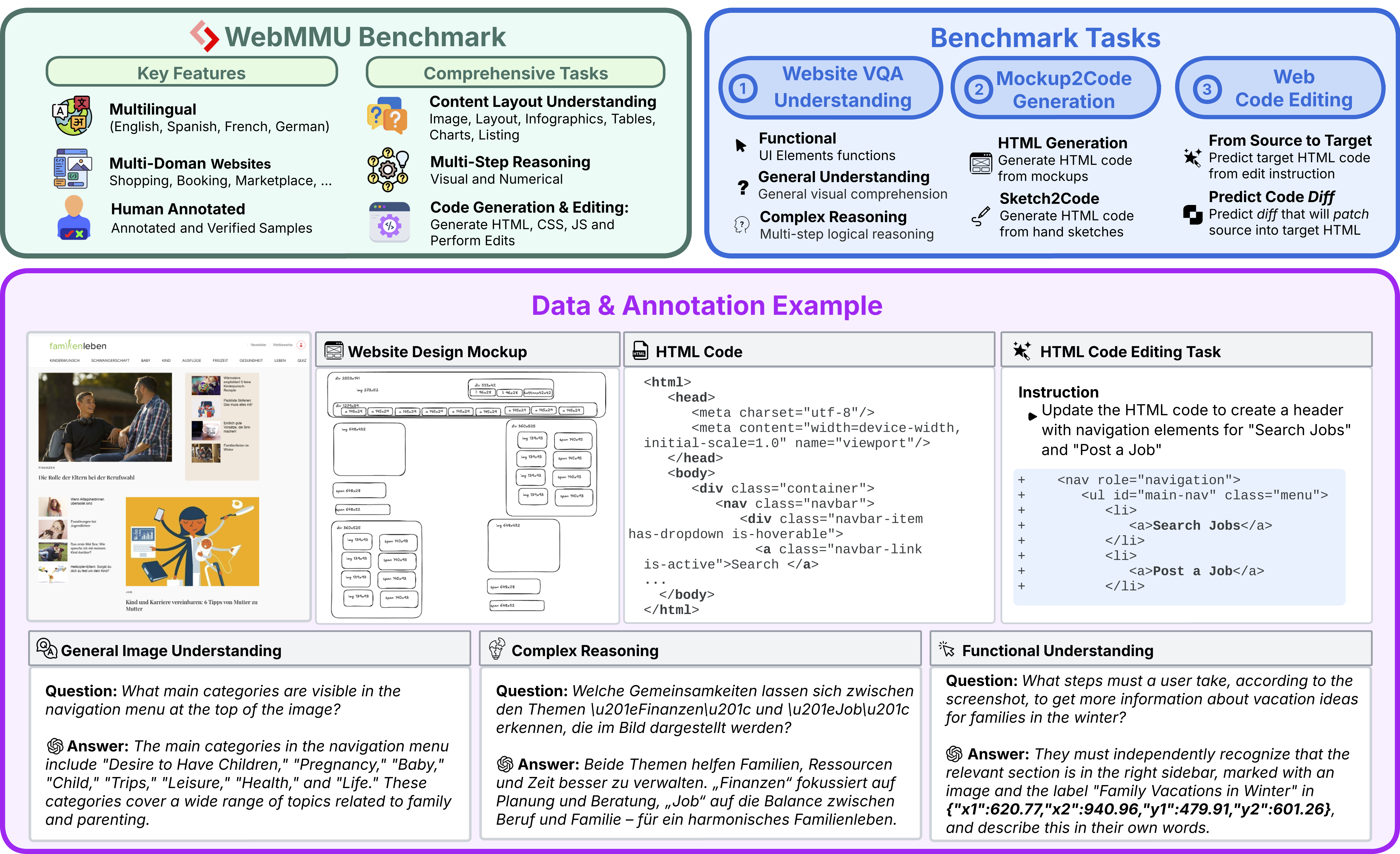

WebMMU: A Multimodal, Multilingual Benchmark for Website Understanding & Code Generation

WebMMU addresses a critical gap in AI evaluation: how well can models understand and manipulate real websites? Current benchmarks often use simplified or synthetic data, masking the true challenges of real-world web interaction.

WebMMU evaluates three fundamental capabilities that AI systems need for real-world web interaction. Each task targets specific skills that are essential for building intelligent web agents and development tools.

WebQA: the ability to understand and reason about website content and functionality through visual analysis.

Example questions: "Which button should a user click to view their order history?" or "Sum the prices of all items in the shopping cart."

Measures: Spatial reasoning, understanding UI hierarchy, and connecting visual elements to functionality.

Mockup2Code: the ability to translate visual designs into functional HTML/CSS code that accurately reproduces the intended layout and styling.

Example: "Generate code for this login page sketch, preserving layout and style."

Measures: Understanding design intent, maintaining visual fidelity, and producing clean, maintainable code.

Code Editing: the ability to make precise, functional modifications to existing website code based on user requirements.

Example: "Add a dark mode toggle to the navbar and ensure all text remains readable."

Measures: Understanding code structure, preserving functionality, and making targeted changes without unintended side effects.

WebMMU's dataset is built with rigorous quality standards to ensure it reflects real-world complexity.

Our dataset spans diverse real-world scenarios, including e-commerce platforms, government websites, educational portals, and news sites, so that models are tested on the range of web interfaces they are likely to encounter in practice. Each example is authored by expert annotators with domain knowledge and then reviewed by multiple professionals for accuracy and relevance, as part of a three-stage quality assurance process involving 127 professionals.

| Task | English | Spanish | German | French | Total |
|---|---|---|---|---|---|
| Website Images | 392 | 133 | 130 | 131 | 786 |
| WebQA | 1,476 | 484 | 379 | 456 | 2,795 |
| Mockup2Code | 180 | 93 | 85 | 78 | 436 |
| Code Editing | 165 | 75 | 67 | 68 | 375 |
| Total | 2,213 | 785 | 661 | 733 | 4,392 |


Browse through real samples from each task directly on Hugging Face. Each dataset contains authentic examples with expert annotations and quality assurance.

Explore real-world code editing examples where models must make precise modifications to existing HTML/CSS/JavaScript code based on user instructions.

Browse hand-drawn and digital mockups that models must convert into functional HTML/CSS code, testing visual-to-code translation capabilities.

Explore complex, visually grounded questions about real website screenshots, testing models' ability to understand and reason about web interfaces.

WebMMU's comprehensive evaluation reveals significant gaps in current AI capabilities for real-world web interaction. Our results provide actionable insights for researchers and developers working on multimodal AI systems.

WebMMU uses a rigorous evaluation protocol combining LLM-as-Judge scoring (validated by human annotators with 89–91% agreement) and automatic metrics (BLEU, TreeBLEU, visual similarity). This multi-faceted approach enables fine-grained analysis of model performance across reasoning, grounding, and code correctness.
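Of the automatic metrics, TreeBLEU is the least self-explanatory: it scores how much of a reference page's DOM structure the generated code reproduces, ignoring text and styling. The sketch below assumes the definition commonly used in earlier design-to-code work, which may differ in detail from WebMMU's own implementation: collect every 1-height subtree (an element's tag paired with the ordered tags of its direct children) from both pages, then report the fraction of the reference's subtrees that the generated page also contains. All function and class names are illustrative.

```python
from html.parser import HTMLParser

# Elements that never have children, so no closing tag is expected.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class Node:
    def __init__(self, tag):
        self.tag = tag
        self.children = []


class TreeBuilder(HTMLParser):
    """Builds a tags-only element tree; text and attributes are ignored."""

    def __init__(self):
        super().__init__()
        self.root = Node("#root")
        self.stack = [self.root]

    def handle_starttag(self, tag, attrs):
        node = Node(tag)
        self.stack[-1].children.append(node)
        if tag not in VOID_TAGS:
            self.stack.append(node)

    def handle_startendtag(self, tag, attrs):
        # Self-closing syntax such as <br/>: record the element but do not descend.
        self.stack[-1].children.append(Node(tag))

    def handle_endtag(self, tag):
        # Close back to the nearest matching open element; ignore stray end tags.
        for i in range(len(self.stack) - 1, 0, -1):
            if self.stack[i].tag == tag:
                del self.stack[i:]
                break


def one_height_subtrees(html):
    """Set of (parent_tag, (child_tag, ...)) pairs for every element with children."""
    builder = TreeBuilder()
    builder.feed(html)
    builder.close()
    found = set()
    pending = list(builder.root.children)  # skip the synthetic root node
    while pending:
        node = pending.pop()
        if node.children:
            found.add((node.tag, tuple(child.tag for child in node.children)))
            pending.extend(node.children)
    return found


def tree_bleu(generated_html, reference_html):
    """Fraction of the reference's 1-height subtrees that the generated page contains."""
    reference = one_height_subtrees(reference_html)
    if not reference:
        return 0.0
    return len(reference & one_height_subtrees(generated_html)) / len(reference)


reference = "<div><h1>Title</h1><p>Intro</p><ul><li>A</li><li>B</li></ul></div>"
flattened = "<div><h1>Title</h1><p>Intro</p><p>A</p><p>B</p></div>"
print(tree_bleu(reference.replace("div", "section"), reference))  # 0.5
print(tree_bleu(flattened, reference))  # 0.0
```

Because only tag structure is compared, the `<section>` variant loses credit for its outer element but keeps it for the intact list, while the flattened variant, which has the right text but no list, gets no credit at all.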

WebQA evaluates models on their ability to answer questions about real website screenshots across three categories: 🧠 Reasoning (complex logical thinking), ⚙️ Agentic UI Actions (navigation and interaction), and 🔎 Content Understanding (basic information extraction). Most models struggle with complex reasoning and agentic UI actions, especially outside English.

Model accuracy (%) by question type (🧠 Reasoning, ⚙️ Agentic UI Actions, 🔎 Content Understanding) and language.

| Model | English 🧠 | English ⚙️ | English 🔎 | French 🧠 | French ⚙️ | French 🔎 | German 🧠 | German ⚙️ | German 🔎 | Spanish 🧠 | Spanish ⚙️ | Spanish 🔎 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Claude 3.5 Sonnet | 51.4 | 3.7 | 64.1 | 53.0 | 12.7 | 51.2 | 26.9 | 15.6 | 31.6 | 63.8 | 15.9 | 41.9 |
| Gemini 2.0 Flash | 44.3 | 1.2 | 59.2 | 41.6 | 9.0 | 52.8 | 18.2 | 12.8 | 29.1 | 46.1 | 12.0 | 36.1 |
| QwenVL 72B | 23.6 | 4.3 | 53.7 | 16.9 | 13.9 | 54.5 | 15.3 | 17.5 | 36.2 | 29.1 | 12.7 | 41.0 |
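Each cell in the table above is an accuracy computed separately for one language and one question type. A minimal sketch of that bookkeeping follows. It assumes, hypothetically, that every judged WebQA answer has already been reduced to a `(language, category, is_correct)` record; the category keys are illustrative labels for the three icons, not identifiers from the WebMMU release.

```python
from collections import defaultdict

# Illustrative keys for the three question types: 🧠, ⚙️, 🔎.
CATEGORIES = ("reasoning", "agentic_ui", "content")


def accuracy_table(records):
    """Map (language, category) to accuracy in percent, rounded to one decimal."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for language, category, is_correct in records:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        totals[(language, category)] += 1
        correct[(language, category)] += int(is_correct)
    return {key: round(100 * correct[key] / totals[key], 1) for key in totals}


records = [
    ("en", "reasoning", True),
    ("en", "reasoning", False),
    ("en", "agentic_ui", False),
    ("fr", "content", True),
]
print(accuracy_table(records))
# {('en', 'reasoning'): 50.0, ('en', 'agentic_ui'): 0.0, ('fr', 'content'): 100.0}
```

Keeping the cells separate rather than pooling them is what exposes gaps such as the very low ⚙️ scores in English, which a single overall average would hide.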

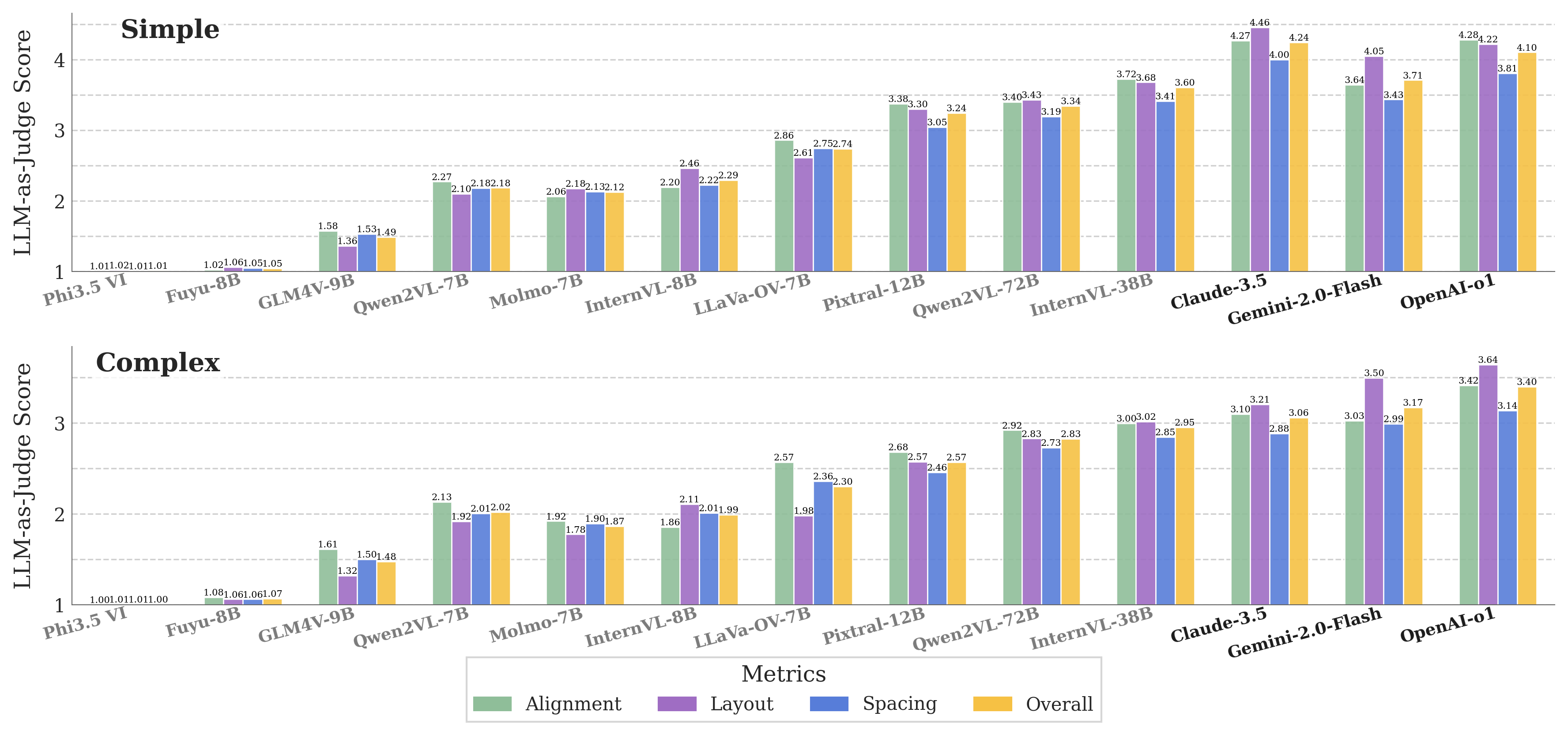

Mockup2Code evaluates how well models can generate HTML/CSS code from hand-drawn or digital web mockups. Models are scored on a 1-5 scale for both visual similarity (how well the generated code matches the visual design) and code quality (cleanliness, maintainability, and best practices). While proprietary models perform well on simple layouts, all models struggle with complex or deeply nested UI structures.
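Scores on a 1–5 scale from an LLM judge still have to be extracted from free text and averaged. The sketch below is one hypothetical way to do that and is not WebMMU's published protocol: it assumes the judge is instructed to include lines such as `Visual similarity: 4` and `Code quality: 3`, and it rejects any response that omits either score rather than guessing a value.

```python
import re
from statistics import mean

CRITERIA = ("visual similarity", "code quality")

# Hypothetical judge format: one "<criterion>: <score>" line per criterion, score 1-5.
SCORE_LINE = re.compile(
    r"^\s*(visual similarity|code quality)\s*:\s*([1-5])\s*$",
    re.IGNORECASE | re.MULTILINE,
)


def parse_judge_scores(judge_text):
    """Extract both 1-5 scores from one judge response; raise if either is missing."""
    scores = {criterion.lower(): int(value)
              for criterion, value in SCORE_LINE.findall(judge_text)}
    missing = set(CRITERIA) - set(scores)
    if missing:
        raise ValueError(f"judge response lacks scores for: {sorted(missing)}")
    return scores


def average_scores(judge_texts):
    """Mean score per criterion across many judged Mockup2Code samples."""
    parsed = [parse_judge_scores(text) for text in judge_texts]
    return {c: mean(p[c] for p in parsed) for c in CRITERIA}


responses = [
    "Layout matches; spacing is off.\nVisual similarity: 4\nCode quality: 3",
    "visual similarity: 3\ncode quality: 5",
]
print(average_scores(responses))
```

Failing loudly on a malformed response, instead of defaulting to a middle score, keeps parsing errors from silently pulling averages toward 3.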

Code Editing tests whether models can make precise, functional changes to real website code based on user instructions. Models are evaluated on correctness (does the code work as intended?) and functionality (does it preserve existing features?). Despite advances in code generation, no model reliably produces correct, ready-to-use code edits—manual fixes are still required for production use.

Grounding Is the Hardest, Reasoning Comes Next, General Understanding Is the Easiest

WebMMU shows a clear difficulty gap across tasks. Most models handle basic visual understanding (like reading labels and identifying images) fairly well. They do worse at multi-step reasoning, such as performing calculations or combining information from different parts of a web page. But the hardest challenge is grounding — identifying the exact location of elements on a page and reasoning about user actions (e.g., where to click). For example, while many models could list navigation categories correctly, few could pinpoint where to click to open the "About Us" page, with grounding accuracy often falling below 10%. This reflects a gap between recognizing content and understanding how users interact with it.
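Scoring a grounding answer means checking a location, not a label. A common approach, assumed here for illustration because this section does not describe WebMMU's exact scoring rule, counts a predicted click as correct when it falls inside the bounding box of the target element. The `Box` type and the pixel coordinates in the usage lines are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical element bounds in screenshot pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y):
        """True if the point lies inside the box, edges included."""
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def grounding_accuracy(clicks, targets):
    """Percentage of predicted (x, y) clicks that land inside their target box."""
    if len(clicks) != len(targets):
        raise ValueError("need exactly one predicted click per target")
    if not targets:
        return 0.0
    hits = sum(box.contains(x, y) for (x, y), box in zip(clicks, targets))
    return 100 * hits / len(targets)


about_us = Box(left=900, top=20, right=980, bottom=50)  # "About Us" link
clicks = [(940, 35), (120, 35)]  # the second click hits a different nav item
print(grounding_accuracy(clicks, [about_us, about_us]))  # 50.0
```

Under this rule, a model that names the right link but points at the wrong place gets no credit, which is exactly the gap between recognizing content and acting on it.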

Simple Layouts Are Fine, But Complex UI Hierarchies Break the Models

When turning design mockups into HTML/CSS code, most models succeed on simple, flat layouts. But as soon as mockups include nested sections, multi-column layouts, or complex styling, the models break down. They often flatten the hierarchy, misalign elements, or miss relationships between components. For example, while they can correctly generate a basic "Contact Us" page, they struggle with a product page featuring sidebars, filters, and product grids. This suggests current models understand basic page layouts but lack deeper comprehension of modern, structured web design.
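Flattening can be made measurable with a rough diagnostic: compare the deepest element nesting in the reference page with that in the generated page. The sketch below is a hypothetical check, not a WebMMU metric. It uses Python's standard `html.parser`, treats void elements such as `<img>` as leaves, and a ratio well below 1 only hints at lost hierarchy, since a page can also be shallow for legitimate reasons.

```python
from html.parser import HTMLParser

# Elements that never have children, so no closing tag is expected.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class DepthMeter(HTMLParser):
    """Tracks the deepest level of element nesting seen while parsing."""

    def __init__(self):
        super().__init__()
        self.open_tags = []
        self.max_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            # A void element sits one level below its parent but never opens.
            self.max_depth = max(self.max_depth, len(self.open_tags) + 1)
            return
        self.open_tags.append(tag)
        self.max_depth = max(self.max_depth, len(self.open_tags))

    def handle_endtag(self, tag):
        # Pop back to the nearest matching open tag; ignore stray end tags.
        if tag in self.open_tags:
            while self.open_tags.pop() != tag:
                pass


def max_depth(html):
    meter = DepthMeter()
    meter.feed(html)
    meter.close()
    return meter.max_depth


def flattening_ratio(generated_html, reference_html):
    """Generated depth divided by reference depth; values below 1 suggest flattening."""
    return max_depth(generated_html) / max(max_depth(reference_html), 1)


reference = ("<main><aside><ul><li>Filter</li></ul></aside>"
             "<section><div><img src='p.png'></div></section></main>")
flattened = "<main><p>Filter</p><img src='p.png'></main>"
print(max_depth(reference), max_depth(flattened))  # 4 2
print(flattening_ratio(flattened, reference))  # 0.5
```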

Code Editing: Models Generate Edits, But Risk Breaking the Site

In code editing tasks, models can follow instructions like "Add a header with search and post buttons," but often produce edits that break the site's structure or behavior. While the syntax of their HTML/CSS/JavaScript is mostly correct, they miss subtle dependencies, like class names or JavaScript functions, that keep the page functional. Even top models cannot yet generate reliable, ready-to-deploy code patches. This makes human review essential for all but the simplest edits.
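Part of that review can be automated. The sketch below is a hypothetical screening step, not part of WebMMU, that flags two of the dependency breaks described above: class names present in the original HTML but missing after the edit, and inline event handlers (for example `onclick="toggleMenu()"`) that call a function the edited page no longer defines with a `function name(` declaration. Because it relies on regular expressions, it misses functions defined in external scripts or as arrow functions, and a flagged class may have been removed on purpose; it narrows what a reviewer checks rather than replacing the reviewer.

```python
import re

CLASS_ATTR = re.compile(r'class\s*=\s*["\']([^"\']*)["\']', re.IGNORECASE)
HANDLER_CALL = re.compile(r'\bon[a-z]+\s*=\s*["\']\s*([A-Za-z_$][\w$]*)\s*\(', re.IGNORECASE)
FUNCTION_DEF = re.compile(r'\bfunction\s+([A-Za-z_$][\w$]*)\s*\(')


def class_names(html):
    """All CSS class names used in class="..." attributes."""
    return {name for attr in CLASS_ATTR.findall(html) for name in attr.split()}


def dependency_report(original_html, edited_html):
    """Class names the edit removed, and inline handlers whose function is undefined."""
    removed_classes = sorted(class_names(original_html) - class_names(edited_html))
    defined = set(FUNCTION_DEF.findall(edited_html))
    dangling = sorted(set(HANDLER_CALL.findall(edited_html)) - defined)
    return {"removed_classes": removed_classes, "dangling_handlers": dangling}


original = ('<nav class="navbar main-nav"><button class="menu-btn" onclick="toggleMenu()">Menu</button></nav>'
            '<script>function toggleMenu() { document.body.classList.toggle("open"); }</script>')
edited = ('<header class="navbar"><button class="menu-btn" onclick="toggleMenu()">Menu</button>'
          '<button onclick="newPost()">Post</button></header>')
print(dependency_report(original, edited))
# {'removed_classes': ['main-nav'], 'dangling_handlers': ['newPost', 'toggleMenu']}
```

In the toy edit, the new header keeps the menu button but drops the script that defined `toggleMenu` and adds a `newPost` handler with no definition, the kind of syntactically valid but broken change a quick visual check would miss.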

Open-Source Models Lag Behind Closed-Source Models, Especially on Complex Tasks

Across all tasks, closed-source models like Gemini 2.0 Flash and Claude 3.5 consistently outperform open-source alternatives. They show better grounding, more accurate reasoning, and higher-quality code generation. For example, in the mockup-to-code task, closed-source models score above 4 out of 5 on simple layouts, while open-source models often struggle to score above 3. However, even the best models — closed or open — fail on complex designs, especially with nested layouts and precise spacing. Open-source models also suffer from greater multilingual performance drops, highlighting the training and resource gap between the two categories.

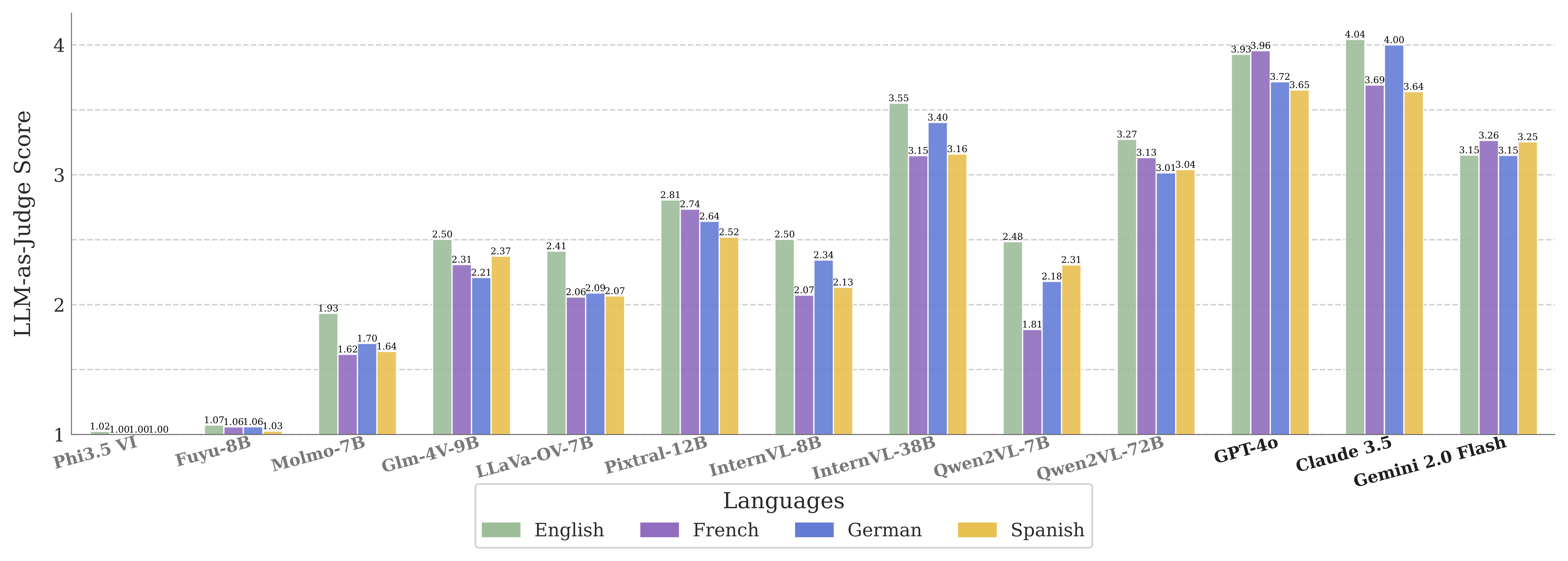

Multilingual Tasks Expose Major Gaps in Cross-Lingual Generalization

WebMMU covers English, Spanish, German, and French. Across all tasks, performance in non-English languages drops significantly — sometimes by more than half. Grounding and reasoning suffer most in these languages. This reveals that despite large training datasets, models haven't yet learned to generalize well to multilingual websites, which often have layout and content differences across languages.
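"More than half" here means a relative drop measured against the English score. As a worked example from the 🔎 Content Understanding columns of the WebQA table: Claude 3.5 Sonnet falls from 64.1% in English to 31.6% in German, and Gemini 2.0 Flash from 59.2% to 29.1%. The helper name below is illustrative.

```python
def relative_drop(english_score, other_score):
    """Decrease from the English score, as a fraction of the English score."""
    if english_score <= 0:
        raise ValueError("English score must be positive")
    return (english_score - other_score) / english_score


# 🔎 Content Understanding accuracy (%) from the WebQA table: (English, German).
content_scores = {
    "Claude 3.5 Sonnet": (64.1, 31.6),
    "Gemini 2.0 Flash": (59.2, 29.1),
}

for model, (english, german) in content_scores.items():
    print(f"{model}: {relative_drop(english, german):.1%} lower in German")
# Claude 3.5 Sonnet: 50.7% lower in German
# Gemini 2.0 Flash: 50.8% lower in German
```

Both models lose slightly more than half of their English accuracy on this question type, even though the absolute gap (about 30 percentage points) looks smaller than the relative one.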

The Big Picture: Real-World Web Automation Remains a Challenge

WebMMU shows that while AI models are progressing, they remain far from automating real-world web development. They can extract basic information and generate simple UI code, but they struggle with reasoning, structured code generation, precise edits, and multilingual scenarios. Closing this gap will require better multimodal reasoning, web-specific model architectures, and stronger cross-lingual capabilities — essential steps toward building truly intelligent web automation agents.